Support us

Support our activities and help children and adults in need. For 15 years we have been helping children and adults solve their problems online. Get involved too! Every bit of help counts! Just click the DONATE button.

Other articles

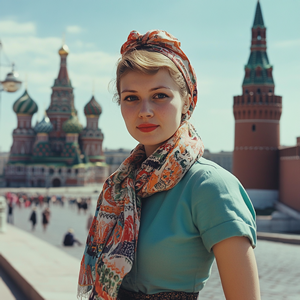

Perhaps you have also come across pages like these on Facebook: a beautiful photograph of a foreign city labeled as Moscow, Sevastopol, or another Russian city. In reality, it shows a completely different place, perhaps in Spain, Brazil, the USA, or the UK, and the label and description are entirely fabricated. Similarly, photos of beautiful women in military uniform create the impression that they serve in the Russian army. But appearances can be deceiving: it is a hoax.

One of the key features of the large language models behind generative artificial intelligence is their ability to converse with us in natural human language. This naturally leads us to personify these AI tools and attribute human traits to them: each becomes our virtual assistant, persona, friend, helper, and in many cases even companion. AI conversational tools compete effectively with living people, raising the question of whether they might largely replace human conversation in the near future. After all, AI does not argue; it apologizes, avoids conflict, does not explode with emotion, motivates and praises us, is not aggressive, and we never have to apologize to it.

Recently, artificial intelligence tools, especially ChatGPT, Gemini, and Copilot, have attracted not only technology enthusiasts but also scammers, who exploit the tools' popularity to spread malware and phishing. This trend is worrying: as interest in AI grows, so does the number of people who fall for false promises of easy profit or effortless problem-solving through these technologies.

In today's digital world, manipulative techniques are an increasingly common part of public communication, particularly in election campaigns and in advertising, which is often fraudulent. The E-Bezpečí team from the Faculty of Education, Palacký University in Olomouc, offers an innovative solution: the FactNinja application. Powered by the GPT-4 Omni artificial intelligence model, this tool quickly analyzes images to uncover manipulative techniques, argumentative fallacies, and other unethical practices aimed at influencing public opinion.

Donald Trump, the former President of the United States, is once again facing criticism for using artificial intelligence in his election campaign. After the discovery of fake AI-generated photos purporting to show Taylor Swift fans and Black voters supporting Trump, concerns about the spread of disinformation and the manipulation of public opinion have grown. This issue will need attention in the future, as the misuse of artificial intelligence is likely to affect democratic processes more and more.

Awards for the E-Bezpečí project

KYBER Cena (KYBER Award) 2023: 1st place

Best Local Crime Prevention Project 2023: 1st place

European Crime Prevention Award 2015: 1st place