Generative artificial intelligence has many benefits (which we cover, for example, on our specialized pages), but we must not ignore the risks and problems this technology brings. In recent months, more and more photos and videos created with generative artificial intelligence have appeared in the public space. Many of them depict famous personalities without their consent, with the aim of discrediting them or exploiting their likeness, for example in fraudulent schemes. Ordinary internet users are affected as well, such as teenage girls who have become targets of so-called undressing applications.

Generated Pornography and the Case of Taylor Swift

One of the serious problems associated with generative artificial intelligence is the creation of sexually explicit (often pornographic) photos/videos of famous personalities – actresses, singers, but also, for example, politicians. However, it does not have to be celebrities at all – anyone can become a target. Creating these materials is extremely easy – there are many applications that allow this, and new ones are being developed every day.

Especially dangerous are so-called deep fake technologies. Using advanced machine learning algorithms, they allow the creation of extremely realistic videos and audio recordings in which people's faces, bodies, and voices can be manipulated. They can also generate photos of specific people in various situations (including sexually explicit ones) in a matter of seconds.

Singer Taylor Swift, for example, recently became a target of AI-generated pornography. On Wednesday, January 24, 2024, sexually explicit deepfake photos of her spread on the X network (formerly Twitter), garnering over 27 million views and more than 260,000 likes within 19 hours before the account that posted the images was suspended. Deepfakes showing Swift naked and in sexual scenes continue to spread, both on X and now on other platforms as well.

Sexually explicit image of Taylor Swift generated by AI (cropped)

However, this problem does not affect only famous personalities; girls all over the world report that generated pornography has misused their identities. In the United States, for example, dozens of high school-aged girls have reported being victims of deepfakes. Similar cases occurred earlier in the UK, where a doctored video featuring porn actresses whose faces were replaced with those of teenage girls contributed to the suicide of a 14-year-old girl. Cases have also been reported in Spain and the Czech Republic, as we reported on our portal as early as October last year. Our warning that AI-generated pornography depicting real people would become a serious problem has thus fully come true. The future is now!

Generation of Deep Fake Videos of Politicians and Disruption of Democratic Society – Including Elections

One of the problems deep fake videos bring is the erosion of trust in public institutions and political processes, along with interference in democratic processes such as elections. Falsified videos and audio recordings depicting politicians in compromising or controversial situations can be easily disseminated through social networks and other media. They are often so convincing that the average viewer finds it difficult to recognize them as fake. As a result, disinformation can spread, potentially influencing public opinion and elections.

Slovakia, for example, has recent experience with election interference using these technologies. Just before the elections, a falsified recording began to spread of a phone conversation about manipulating election results, supposedly between the chairman of Progressive Slovakia, who was running for the National Council of the Slovak Republic, and journalist Monika Tódová. However, the conversation never took place; it was all created by artificial intelligence. Both the Slovak police and the AFP fact-checking agency labeled the recording as fake. The fake recording spread virally across the internet, clearly influencing some voters.

We also have experience with fraudulent videos in the Czech Republic; for example, during the presidential elections in 2023, an altered video of presidential candidate Petr Pavel circulated online.

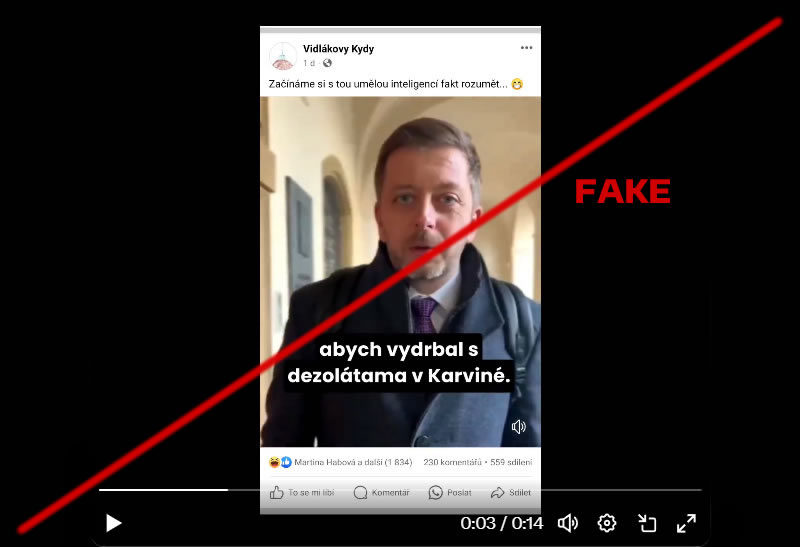

Currently, a fraudulent deep fake video targeting Interior Minister Vít Rakušan is circulating online.

Deep fake video targeting Minister Rakušan

Generative Artificial Intelligence and Fraud

Generative artificial intelligence tools can also be misused for fraudulent activities, for example to generate phishing or blackmail emails. These tools can also generate disinformation content. While we have become accustomed to text-based scams and are less easily swayed by them, we have not yet gotten used to deep fake videos, which are becoming more common in online fraud.

Typical examples of fraud using deep fake videos of famous personalities are various "fraudulent investment ads" that promise huge profits for a minimal investment. The scam is backed by deep fake videos of famous personalities (former Prime Minister Andrej Babiš, Žantovský, Tykač, etc.) who appear to confirm how great and guaranteed the investment is. Logos of the ČEZ group, the Office of the Government of the Czech Republic, or the CNN Prima News station are also misused. These videos can indeed persuade some internet users who lack sufficient media literacy to believe the message and invest in fraudulent products. Those who do are likely to be scammed and lose their money.

Screenshot of a fraudulent deep fake video using Andrej Babiš and the CNN Prima News brand

Get Used to It, It Will Get Worse

Given the availability of generative artificial intelligence tools that allow the creation of the problematic content mentioned above, we must expect such cases to increase. We must prepare for the fact that human likeness can be easily faked and that it is easy to mimic a person's appearance (their facial expressions, gestures) as well as their voice. AI tools have the potential to radically transform the way we perceive digital content, bringing a range of ethical and legal dilemmas. Will this type of digital content be detectable? Will it be regulated? What will it do to people? What will we trust in the future? How will we protect human identity? These are all questions we need to find answers to very quickly…

For E-Bezpečí

Kamil Kopecký

Palacký University in Olomouc

Sources:

https://www.facebook.com/policiaslovakia/posts/706246141540957?ref=embed_post